EMP radiation from nuclear space bursts in 1962

Above: the master's degree thesis by Louis W. Seiler, Jr., A Calculational Model for High Altitude EMP, report ADA009208, computes these curves for the peak EMP at ground zero for a burst above the magnetic equator, where the Earth's magnetic field is far weaker than it is at high latitudes (nearer the poles), where magnetic field lines converge (increasing the magnetic field strength). The discoverer of the magnetic dipole EMP mechanism, Longmire, states in another report that the peak EMP is almost directly proportional to the transverse component of the Earth's magnetic field across the radial line from the bomb to the observer. Seiler shows that the peak EMP is almost directly proportional to the strength of the Earth's magnetic field: the curves above apply to 0.3 Gauss magnetic field strength, which is the weak field at the equator (the 1962 American tests over Johnston Island were nearer the equator). Over North America, Europe or Russia, peak EMP fields would be nearly double those in the diagram above, due to the Earth's stronger magnetic field of around 0.5 Gauss, which deflects Compton electrons more effectively, causing more of their kinetic energy to be converted into EMP energy than in the 0.3 Gauss field over Johnston Island in the 1962 American tests. If you look at the curves above, you see that the peak EMP is only a weak function of the gamma ray output of the weapon (the peak EMP increases by just a factor of 5, from roughly 10 kV/m to 50 kV/m, as prompt gamma ray output rises by a factor of 10,000, i.e. from 0.01 to 100 kt); it is far less than directly proportional to yield.
Seiler also shows that large two-stage thermonuclear weapons will often produce a smaller peak EMP than a single stage fission bomb, because of "pre-ionization" of the atmosphere by X-rays and gamma rays from the first stage, which ionize the air, making it electrically conductive so that free electrons and ions almost immediately short out the Compton current from the larger secondary stage, negating most of the EMP that would otherwise occur.
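As a rough illustration of the magnetic field scaling described above, the sketch below rescales a peak field read from the 0.3 Gauss curves to a 0.5 Gauss location. It assumes exact linear proportionality, whereas the text only says "almost directly proportional", and the function name and the 30 kV/m sample value are hypothetical, chosen purely for illustration.

```python
# Minimal sketch: rescale an equatorial peak EMP field to a stronger
# geomagnetic field, ASSUMING exact linear proportionality (the text
# only claims "almost directly proportional").

B_EQUATOR_GAUSS = 0.3  # field strength used for Seiler's curves (from the text)
B_MIDLAT_GAUSS = 0.5   # approximate field over North America, Europe or Russia (from the text)


def scale_peak_field(peak_kv_per_m, b_from=B_EQUATOR_GAUSS, b_to=B_MIDLAT_GAUSS):
    """Return the peak field rescaled from field strength b_from to b_to."""
    return peak_kv_per_m * b_to / b_from


field_ratio = B_MIDLAT_GAUSS / B_EQUATOR_GAUSS
print(f"scaling factor: {field_ratio:.2f}")

# Hypothetical sample value, not a reading from the graph.
print(f"30 kV/m at 0.3 Gauss -> {scale_peak_field(30.0):.0f} kV/m at 0.5 Gauss")
```

Under this linear assumption the factor is about 1.7, which is the basis for the statement that fields at higher latitudes are close to double the equatorial values.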

Above: the declassified principles involved in enhanced EMP nuclear weapons are very simple and obvious. Materials are selected to maximize the prompt gamma radiation that comes from the inelastic scatter of high-energy fusion neutrons, while a simple radiation shield around the fission primary stage part of the weapon averts the problem of the shorting-out of the final (fusion) stage EMP by fission primary stage pre-ionization of the atmosphere (which prevents most EMP-producing Compton currents, due to making the air so electrically conductive that it immediately shorts out secondary stage Compton currents). In the Starfish Prime test, the warhead was simply inverted before launch, so the fusion secondary stage prevented pre-ionization of the atmosphere by absorbing downward X-rays and gamma rays from the primary stage! In the film taken horizontally from a Hawaiian mountain top (above the local cloud cover), you can clearly see the primary stage of the Starfish Prime weapon being ejected upwards, out of the top, by the immense blast and radiation impulse which has been delivered to it due to the bigger explosion of the secondary (thermonuclear) stage. The primary stage of the bomb flies upwards into space, expanding as it does so, while the heavier secondary stage remains almost stationary below it (photo sequence below).

Philip J. Dolan's Capabilities of Nuclear Weapons, DNA-EM-1 chapter 7, page 7-1 (change 1 page updates, 1978), report ADA955391, states that low yield pure fission bombs typically release 0.5% of their yield as prompt gamma rays, compared to only 0.1% in old high yield warhead designs with relatively thick outer cases, like the 1.4 Mt STARFISH test in 1962. Furthermore, Northrop's 1996 handbook of declassified 1990s EM-1 data gives details on the prompt gamma ray output from four very different nuclear weapon designs, showing that the enhanced radiation warhead ("neutron bomb") releases 2.6% of its total yield in the form of prompt gamma rays, mainly because its outer casing is designed to minimize radiation absorption, allowing as much radiation as possible to escape. This gives an idea of the enormous variation in the EMP potential of existing bomb designs. About 3.5% of the energy of fission is emitted as prompt gamma rays, and neutrons exceeding 0.5 MeV energy undergo inelastic scatter with heavy nuclei (such as iron and uranium), leaving the nuclei as excited isomers that release further prompt gamma rays.
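To make these percentages concrete, the sketch below converts a total yield into a prompt gamma ray yield using the fractions quoted above. The dictionary keys, the function name and the 10 kt fission example are illustrative assumptions; only the three fractions and the 1.4 Mt STARFISH yield come from the text.

```python
# Minimal sketch: prompt gamma ray yield = total yield x escaping fraction.
# Fractions are those quoted in the text; labels are informal.

PROMPT_GAMMA_FRACTION = {
    "low yield pure fission": 0.005,           # 0.5%
    "old high yield, thick outer case": 0.001,  # 0.1% (e.g. STARFISH)
    "enhanced radiation warhead": 0.026,        # 2.6%
}


def prompt_gamma_yield_kt(total_yield_kt, fraction):
    """Return the prompt gamma ray yield in kilotons."""
    return total_yield_kt * fraction


starfish_gamma_kt = prompt_gamma_yield_kt(
    1400.0, PROMPT_GAMMA_FRACTION["old high yield, thick outer case"]
)
# Hypothetical 10 kt pure fission device, for comparison only.
fission_gamma_kt = prompt_gamma_yield_kt(
    10.0, PROMPT_GAMMA_FRACTION["low yield pure fission"]
)

print(f"STARFISH (1.4 Mt): {starfish_gamma_kt:.2f} kt of prompt gamma rays")
print(f"10 kt fission:     {fission_gamma_kt:.3f} kt of prompt gamma rays")
```

A 140-fold difference in total yield shrinks to a 28-fold difference in prompt gamma output here, before the weak dependence of peak EMP on gamma output is even considered.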

Thus, low yield bombs at somewhat lower altitudes than 400 km can produce peak EMP fields that exceed those from the 1962 high altitude thermonuclear tests, while still affecting vast areas. As mentioned above, single stage (fission) weapons in some cases produce a larger EMP than high-yield two-stage thermonuclear weapons. Weapon designs that use a minimal tamper, a minimal shell of TNT for implosion (or a linear implosion system), and a minimal outer casing can maximise the fraction of the prompt gamma rays which escape from the weapon, enhancing the EMP. Hence, a low yield fission device could easily produce a peak (VHF to UHF) EMP effect on above ground cables similar to the 1962 STARFISH test (although the delayed, very low intensity MHD-EMP ELF effects penetrating through the earth into underground cables would be weaker, since the MHD-EMP is essentially dependent upon the total fission yield of the weapon, not the prompt radiation output; MHD-EMP occurs as the fireball expands and as the ionized debris travels along the magnetic field lines, seconds to minutes after detonation).

Naïvely, by assuming that a constant fraction of the bomb energy is converted into EMP, textbook radio transmission theory suggests that the peak radiated EMP should then be proportional to the square root of the bomb energy and inversely proportional to the distance from the bomb. But in fact, as the graph above shows, this assumption is a misleading, false approximation: the fraction of bomb energy converted into the EMP is highly variable instead of being constant, suppressing much of the expected variation of peak EMP field strength with bomb energy. For weapons with a prompt gamma ray yield of 0.01-0.1 kt, the peak EMP on the ground decreases as the weapon is detonated at higher altitudes, from 60 to 300 km. But for prompt gamma ray yields approaching 100 kt, the opposite is true: the peak EMP at ground zero then rises as the burst altitude is increased from 60 to 300 km. What happens here is due to a change in the effective altitude from which the EMP is generated. The fraction of prompt gamma rays absorbed by any thickness of air is constant, but large outputs of prompt gamma rays will allow substantial EMP generation to occur over larger distances than smaller outputs. Hence, high yields are able to ionize and generate EMP within a larger vertical thickness of air (a bigger "deposition region" volume) than smaller yields.
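The gap between the naive square-root law and the computed curves can be put into numbers. The sketch below uses only the endpoint figures quoted earlier from Seiler's graph (roughly 10 kV/m at 0.01 kt and 50 kV/m at 100 kt of prompt gamma rays); fitting a single power law between those two points is an assumption made for illustration, not something the thesis itself does.

```python
import math

# Endpoints quoted in the text from Seiler's curves.
GAMMA_LOW_KT, GAMMA_HIGH_KT = 0.01, 100.0
PEAK_LOW_KV_M, PEAK_HIGH_KV_M = 10.0, 50.0


def naive_peak_ratio(energy_ratio):
    """Peak field ratio if a constant fraction of energy became EMP."""
    return math.sqrt(energy_ratio)


gamma_ratio = GAMMA_HIGH_KT / GAMMA_LOW_KT          # factor of 10,000
naive_ratio = naive_peak_ratio(gamma_ratio)          # square-root law
observed_ratio = PEAK_HIGH_KV_M / PEAK_LOW_KV_M      # factor of 5

# Effective exponent n in E_peak ~ (gamma yield)^n between the two endpoints
# (an illustrative two-point fit, assumed here).
effective_exponent = math.log(observed_ratio) / math.log(gamma_ratio)

print(f"naive square-root law predicts a factor of {naive_ratio:.0f}")
print(f"the curves show a factor of {observed_ratio:.0f}")
print(f"effective exponent: {effective_exponent:.2f} (naive value: 0.50)")
```

The square-root law would predict a hundredfold rise in peak field, whereas the curves show only a fivefold rise, corresponding to an effective exponent of under 0.2.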

For sufficiently large yields, this makes the peak EMP on the ground increase while the burst altitude is increased, despite the increasing distance between the ground and the bomb! This is because a large prompt gamma output is able to produce substantial EMP contributions from a bigger volume of air, effectively utilizing more of the increased volume of air between bomb and ground for EMP generation. This increasing deposition region size for higher yields increases the efficiency with which gamma ray energy is turned into EMP energy. Weapons with a lower output of prompt gamma rays produce a smaller effective "deposition region" volume for EMP production, concentrated at higher altitudes (closer to the bomb, where the gamma radiation is stronger), which is less effective in producing ground-level EMP.

Above: this comparison of the prompt gamma ray deposition regions for space bursts of 1 and 10 megatons total yield (i.e., 1 kt and 10 kt prompt gamma ray yield, respectively) in the 1977 Effects of Nuclear Weapons explains why the peak EMP at ground zero varies as Seiler's graph shows. In all cases (for burst heights of 50-300 km) the base of the deposition region is at an altitude of 8-10 km, but the height of the top of the deposition region is a function of bomb yield as well as burst altitude. The deposition region radius marks the region where the peak conductivity of the air (due to ionization by the nuclear radiation) is 10^-7 S/m; inside this distance the air is conductive and the EMP is being produced by transverse (magnetic field-deflected) Compton electron currents, and is being limited by the air conductivity rise due to secondary electrons. Beyond this radius, the EMP is no longer being significantly produced or attenuated by secondary electrons, and the EMP thus propagates like normal radio waves (of similar frequency). The greater the vertical thickness of the deposition region between the bomb and the surface for a given yield, the greater the EMP intensity. Thus, for the 1 megaton burst shown, the vertical height of the deposition region above ground zero reaches:

62 km altitude for 50 km burst height

84 km altitude for 100 km burst height

74 km altitude for 200 km burst height, and

67 km altitude for 300 km burst height

Hence, the 100 km burst height maximises the thickness of the prompt gamma ray deposition region above ground zero, and maximises the EMP for that 1 megaton yield. (For 1 megaton burst altitudes above 100 km, the inverse square law of radiation reduces the intensity of the prompt gamma rays hitting the atmosphere sufficiently to decrease the deposition region top altitude.) For the 10 megaton yield, the extra yield is sufficient to extend the deposition region to a much greater size and enable it to continue increasing vertically above ground zero as the burst height is increased to 200 km, where it reaches an altitude of 85 km, falling to 79 km for 300 km burst altitude. The extra thickness of the deposition layer enables a greater EMP because the small fraction of the EMP generated in the lowest density air at the highest altitudes, above 70 km or so, suffers the smallest conduction current attenuation (EMP shorting by secondary electrons increases severely with increasing air density, at lower altitudes), so it boosts the total EMP strength at ground zero.
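The comparison above amounts to picking the burst height with the highest deposition region top. A minimal sketch follows, using only the altitudes quoted in the text. The dictionary layout and function name are illustrative, and only two 10 megaton data points are given in the text, so that case is necessarily incomplete.

```python
# Top of the deposition region above ground zero (km), keyed by burst
# height (km). Values are those quoted in the text.
TOP_KM_1MT = {50: 62, 100: 84, 200: 74, 300: 67}
TOP_KM_10MT = {200: 85, 300: 79}  # only these two points are quoted


def best_burst_height(tops_km):
    """Return the burst height giving the highest deposition region top.

    Since the base is at 8-10 km in all cases, the highest top also
    means the thickest deposition region.
    """
    return max(tops_km, key=tops_km.get)


best_1mt = best_burst_height(TOP_KM_1MT)
best_10mt = best_burst_height(TOP_KM_10MT)

print(f"1 Mt:  thickest deposition region at {best_1mt} km burst height")
print(f"10 Mt: thickest (of the quoted points) at {best_10mt} km burst height")
```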

Honolulu Advertiser newspaper article dated 9 July 1962 (local time):

'The street lights on Ferdinand Street in Manoa and Kawainui Street in Kailua went out at the instant the bomb went off, according to several persons who called police last night.'

New York Herald Tribune (European Edition), 10 July 1962, page 2:

'Electrical Troubles in Hawaii': 'In Hawaii, burglar alarms and air-raid sirens went off at the time of the blast.'

EMP effects data is given in the Report of the Commission to Assess the Threat to the United States from Electromagnetic Pulse (EMP) Attack, CRITICAL NATIONAL INFRASTRUCTURES, April 2008:

Page 18: “The Commission has concluded that even a relatively modest-to-small yield weapon of particular characteristics, using design and fabrication information already disseminated through licit and illicit means, can produce a potentially devastating E1 [prompt gamma ray caused, 10-20 nanoseconds rise time] field strength over very large geographical regions.”

Page 27: “There are about 2,000 ... transformers rated at or above 345 kV in the United States with about 1 percent per year being replaced due to failure or by the addition of new ones. Worldwide production capacity is less than 100 units per year and serves a world market, one that is growing at a rapid rate in such countries as China and India. Delivery of a new large transformer ordered today is nearly 3 years, including both manufacturing and transportation. An event damaging several of these transformers at once means it may extend the delivery times to well beyond current time frames as production is taxed. The resulting impact on timing for restoration can be devastating. Lack of high voltage equipment manufacturing capacity represents a glaring weakness in our survival and recovery to the extent these transformers are vulnerable.”

Pages 30-31: “Every generator requires a load to match its electrical output as every load requires electricity. In the case of the generator, it needs load so it does not overspin and fail, yet not so much load it cannot function. ... In the case of EMP, large geographic areas of the electrical system will be down, and there may be no existing system operating on the periphery for the generation and loads to be incrementally added with ease. ... In that instance, it is necessary to have a “black start”: a start without external power source. Coal plants, nuclear plants, large gas- and oil-fired plants, geothermal plants, and some others all require power from another source to restart. In general, nuclear plants are not allowed to restart until and unless there are independent sources of power from the interconnected transmission grid to provide for independent shutdown power. This is a regulatory requirement for protection rather than a physical impediment. What might be the case in an emergency situation is for the Government to decide at the time.”

Page 33: “Historically, we know that geomagnetic storms ... have caused transformer and capacitor damage even on properly protected equipment.”

Page 42: “Probably one of the most famous and severe effects from solar storms occurred on March 13, 1989. On this day, several major impacts occurred to the power grids in North America and the United Kingdom. This included the complete blackout of the Hydro-Quebec power system and damage to two 400/275 kV autotransformers in southern England. In addition, at the Salem nuclear power plant in New Jersey, a 1200 MVA, 500 kV transformer was damaged beyond repair when portions of its structure failed due to thermal stress. The failure was caused by stray magnetic flux impinging on the transformer core. Fortunately, a replacement transformer was readily available; otherwise the plant would have been down for a year, which is the normal delivery time for larger power transformers. The two autotransformers in southern England were also damaged from stray flux that produced hot spots, which caused significant gassing from the breakdown of the insulating oil.”

Page 45: “It is not practical to try to protect the entire electrical power system or even all high value components from damage by an EMP event. There are too many components of too many different types, manufactures, ages, and designs. The cost and time would be prohibitive. Widespread collapse of the electrical power system in the area affected by EMP is virtually inevitable after a broad geographic EMP attack ...”

Page 88: “The electronic technologies that are the foundation of the financial infrastructure are potentially vulnerable to EMP. These systems also are potentially vulnerable to EMP indirectly through other critical infrastructures, such as the power grid and telecommunications.”

Page 110: “Similar electronics technologies are used in both road and rail signal controllers. Based on this similarity and previous test experience with these types of electronics, we expect malfunction of both block and local railroad signal controllers, with latching upset beginning at EMP field strengths of approximately 1 kV/m and permanent damage occurring in the 10 to 15 kV/m range.”

Page 112: “Existing data for computer networks show that effects begin at field levels in the 4 to 8 kV/m range, and damage starts in the 8 to 16 kV/m range. For locomotive applications, the effects thresholds are expected to be somewhat higher because of the large metal locomotive mass and use of shielded cables.”

Page 115: “We tested a sample of 37 cars in an EMP simulation laboratory, with automobile vintages ranging from 1986 through 2002. ... The most serious effect observed on running automobiles was that the motors in three cars stopped at field strengths of approximately 30 kV/m or above. In an actual EMP exposure, these vehicles would glide to a stop and require the driver to restart them. Electronics in the dashboard of one automobile were damaged and required repair. ... Based on these test results, we expect few automobile effects at EMP field levels below 25 kV/m. Approximately 10 percent or more of the automobiles exposed to higher field levels may experience serious EMP effects, including engine stall, that require driver intervention to correct.”

Page 116: “Five of the 18 trucks tested did not exhibit any anomalous response up to field strengths of approximately 50 kV/m. Based on these test results, we expect few truck effects at EMP field levels below approximately 12 kV/m. At higher field levels, 70 percent or more of the trucks on the road will manifest some anomalous response following EMP exposure. Approximately 15 percent or more of the trucks will experience engine stall, sometimes with permanent damage that the driver cannot correct.”

Page 153: “Results indicate that some computer failures can be expected at relatively low EMP field levels of 3 to 6 kilovolts per meter (kV/m). At higher field levels, additional failures are likely in computers, routers, network switches, and keyboards embedded in the computer-aided dispatch, public safety radio, and mobile data communications equipment. ... none of the radios showed any damage with EMP fields up to 50 kV/m. While many of the operating radios experienced latching upsets at 50 kV/m field levels, these were correctable by turning power off and then on.”

Page 161: “In 1957, N. Christofilos at the University of California Lawrence Radiation Laboratory postulated that the Earth’s magnetic field could act as a container to trap energetic electrons liberated by a high-altitude nuclear explosion to form a radiation belt that would encircle the Earth. In 1958, J. Van Allen and colleagues at the State University of Iowa used data from the Explorer I and III satellites to discover the Earth’s natural radiation belts (J. A. Van Allen, and L. A. Frank, “Radiation Around the Earth to a Radial Distance of 107,400 km,” Nature, v183, p430, 1959). ... Later in 1958, the United States conducted three low-yield ARGUS high-altitude nuclear tests, producing nuclear radiation belts detected by the Explorer IV satellite and other probes. In 1962, larger tests by the United States and the Soviet Union produced more pronounced and longer lasting radiation belts that caused deleterious effects to satellites then in orbit or launched soon thereafter.”

Above: USSR Test ‘184’ on 22 October 1962, ‘Operation K’ (ABM System A proof tests) 300-kt burst at 290-km altitude near Dzhezkazgan. Prompt gamma ray-produced EMP induced a current of 2,500 amps measured by spark gaps in a 570-km stretch of 500 ohm impedance overhead telephone line to Zharyq, blowing all the protective fuses. The late-time MHD-EMP was of low enough frequency to enable it to penetrate the 90 cm into the ground, overloading a shallow buried lead and steel tape-protected 1,000-km long power cable between Aqmola and Almaty, firing circuit breakers and setting the Karaganda power plant on fire.

In December 1992, the U.S. Defense Nuclear Agency spent $288,500 on contracting 200 Russian scientists to produce a 17-chapter analysis of effects from the Soviet Union’s nuclear tests, which included vital data on three underwater nuclear tests in the Arctic, as well as three 300 kt high altitude tests at altitudes of 59-290 km over Kazakhstan. In February 1995, two of the military scientists, from the Russian Central Institute of Physics and Technology, lectured on the electromagnetic effects of nuclear tests at Lawrence Livermore National Laboratory. The Soviet Union had first suffered electromagnetic pulse (EMP) damage to electronic blast instruments in their 1949 test. Their practical understanding of EMP damage eventually led them, on Monday 22 October 1962, to detonate a 300 kt missile-carried thermonuclear warhead at an altitude of 290 km (USSR test 184). That was at the very height of the Cold War and the test was detected by America: at 7 pm that day, President John F. Kennedy, in a live TV broadcast, warned the Soviet Union’s Premier Khrushchev of nuclear war if a nuclear missile was launched against the West, even by accident: ‘It shall be the policy of this nation to regard any nuclear missile launched from Cuba against any nation in the Western hemisphere as an attack by the Soviet Union on the United States, requiring a full retaliatory response upon the Soviet Union.’ That Russian space missile nuclear test during the Cuban missile crisis deliberately instrumented the civilian power infrastructure of populated areas in Kazakhstan, without warning, to assess EMP effects on a 570 km long civilian telephone line and a 1,000 km civilian electric power cable! This test produced the worst effects of EMP ever witnessed (the more widely hyped 1.4 Mt, 400 km burst STARFISH EMP effects were trivial by comparison, because of the weaker natural magnetic field strength at Johnston Island). The bomb released 10^25 MeV of prompt gamma rays (0.13% of the bomb yield).
The 570 km East-West telephone line was 7.5 m above the ground, with amplifiers every 60 km. All of its fuses were blown by the induced peak current, which reached 2-3 kA at 30 microseconds, as indicated by the triggering of gas discharge tubes. Amplifiers were damaged, and lightning spark gaps showed that the potential difference reached 350 kV. The 1,000 km long Aqmola-Almaty power line was a lead-shielded cable protected against mechanical damage by spiral-wound steel tape, and buried at a depth of 90 cm in ground of conductivity 10^-3 S/m. It survived for 10 seconds, because the ground attenuated the high frequency field. However, it succumbed completely to the low frequency EMP at 10-90 seconds after the test, since the low frequencies penetrated through 90 cm of earth, inducing an almost direct current in the cable that overheated and set the power supply at Karaganda on fire, destroying it. Cable circuit breakers were only activated when the current finally exceeded the design limit by 30%. This limit was designed for a brief lightning-induced pulse, not for DC lasting 10-90 seconds. By the time they finally tripped, at a 30% excess, a vast amount of DC energy had been transmitted. This overheated the transformers, which are vulnerable to short-circuit by DC. Two later Soviet space tests, of the same 300 kt yield but at lower altitudes down to 59 km, produced EMPs which damaged military generators.
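The prompt gamma ray output quoted for test 184 can be checked against the stated 0.13% of yield, reading the figure as 10^25 MeV. The sketch below is a unit conversion only. It uses the standard definitions 1 kt = 4.184 x 10^12 J and 1 MeV = 1.602 x 10^-13 J, and the variable names are illustrative.

```python
# Minimal sketch: convert the quoted prompt gamma output to kilotons and
# compare it with the 300 kt total yield.

MEV_TO_J = 1.602176634e-13   # joules per MeV
KT_TO_J = 4.184e12           # joules per kiloton of TNT (standard definition)

gamma_output_mev = 1e25      # prompt gamma output quoted for test 184
total_yield_kt = 300.0       # yield quoted for test 184

gamma_output_kt = gamma_output_mev * MEV_TO_J / KT_TO_J
gamma_fraction_percent = 100.0 * gamma_output_kt / total_yield_kt

print(f"prompt gamma output: {gamma_output_kt:.2f} kt")
print(f"fraction of yield:   {gamma_fraction_percent:.2f}%")
```

The result, about 0.38 kt or 0.13% of 300 kt, agrees with the percentage given in the text.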

Above: the STARFISH (1.4 Mt, 400 km detonation altitude, 9 July 1962) detonation, seen from a mountain above the low-level cloud cover on Maui, consisted of a luminous debris fireball expanding in the vacuum of space with a measured initial speed of 2,000 km/sec. (This is 0.67% of the velocity of light and is 179 times the earth's escape velocity. Compare this to the initial upward speed of only 6 times earth's escape velocity, achieved by the 10-cm thick, 1.2 m diameter steel cover blown off the top of the 152 m shaft of the 0.3 kt Plumbbob-Pascal B underground Nevada test on 27 August 1957. In that test, a 1.5 m thick 2 ton concrete plug immediately over the bomb was pushed up the shaft by the detonation, knocking the welded steel lid upward. This was a preliminary experiment by Dr Robert Brownlee called 'Project Thunderwell', which ultimately aimed to launch spacecraft using the steam pressure from deep shafts filled with water, with a nuclear explosion at the bottom; an improvement of Jules Verne's cannon-fired projectile described in De la Terre à la Lune, 1865, where steam pressure would give a more survivable gentle acceleration than Verne's direct impulse from an explosion. Some 90% of the radioactivity would be trapped underground.) The film: 'shows the expansion of the bomb debris from approximately 1/3 msec to almost 10 msec. The partition of the bomb debris into two parts ... is shown; in particular the development of the "core" into an upwards mushroomlike expansion configuration is seen clearly. The fast moving fraction takes the shape of a thick disc. Also the interaction of the bomb debris with the booster at an apparent distance (projected) of approximately 1.5 km is shown.' (Page A1-38 of the quick look report.)
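The two speed comparisons in the caption are simple ratios and can be reproduced as follows. The speed of light and the Earth's surface escape velocity (about 11.19 km/sec) are standard values supplied here, not taken from the quick look report, and the variable names are illustrative.

```python
# Minimal sketch: compare the measured STARFISH debris speed with the
# speed of light and with the Earth's escape velocity.

SPEED_OF_LIGHT_KM_S = 299_792.458
ESCAPE_VELOCITY_KM_S = 11.186    # at the Earth's surface (standard value)

debris_speed_km_s = 2000.0       # measured initial debris speed (from the text)

percent_of_c = 100.0 * debris_speed_km_s / SPEED_OF_LIGHT_KM_S
multiple_of_escape = debris_speed_km_s / ESCAPE_VELOCITY_KM_S

# The Pascal-B steel cover is quoted at 6 times escape velocity.
pascal_b_cover_km_s = 6 * ESCAPE_VELOCITY_KM_S

print(f"debris speed: {percent_of_c:.2f}% of the speed of light")
print(f"debris speed: {multiple_of_escape:.0f} times escape velocity")
print(f"Pascal-B cover: about {pascal_b_cover_km_s:.0f} km/sec")
```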

In this side-on view the fireball expansion has a massive vertical asymmetry due to the effects of the device orientation (the dense upward jetting is an asymmetric weapon debris shock wave, due to the missile delivery system and/or the fact that the detonation deliberately occurred with 'the primary and much of the fusing and firing equipment' vertically above the fusion stage, see page A1-7 of the quick look technical report linked here): 'the STARFISH test warhead was inverted prior to the high-altitude test over Johnston Island in 1962 because of concerns that some masses within the design would cause an undesirable shadowing of prompt gamma rays and mask selected nuclear effects that were to be tested.' (April 2005 U.S. Department of Defense Report of the Defense Science Board Task Force on Nuclear Weapon Effects Test, Evaluation, and Simulation, page 29.). The earth's magnetic field also played an immediate role in introducing asymmetric fireball expansion as seen from Maui: 'the outer shell of expanding bomb materials forms ... at ... 1/25 to 1/10 sec, an elongated ellipsoidal shape with the long axis orientated along the magnetic field lines.' (Page A1-12 of the quick look report.)

The STARFISH test as filmed from Johnston Island with a camera pointing upwards could not of course show the vertical asymmetry, but it did show that the debris fireball: 'separated into two parts ... the central core which expands rather slowly and ... an outer spherically expanding shell ... The diameter of the expanding shell is approximately 2 km at 500 microseconds ...' (William E. Ogle, Editor, A 'Quick Look' at the Technical Results of Starfish Prime, August 1962, report JO-600, AD-A955411, originally secret-restricted data, p. A1-7.) Within 0.04-0.1 second after burst, the outer shell, as filmed from Maui in the Hawaiian Islands, had become elongated along the earth's magnetic field, creating an ellipsoid-shaped fireball. Visible 'jetting' of radiation up and southward was observed from the debris fireball at 20-50 seconds, and some of these jets are visible in the late time photograph of the debris fireball at 3 minutes after burst (above right).

The analysis of STARFISH on the right was done by the Nuclear Effects Group at the Atomic Weapons Establishment, Aldermaston, and was briefly published on their website, with the following discussion of the 'patch deposition' phenomena which applied to bursts above 200 km: 'the expanding debris compresses the geomagnetic field lines because the expansion velocity is greater than the Alfven speed at these altitudes. The debris energy is transferred to air ions in the resulting region of tightly compressed magnetic field lines. Subsequently the ions, charge-exchanged neutrals, beta-particles, etc., escape up and down the field lines. Those particles directed downwards are deposited in patches at altitudes depending on their mean free paths. These particles move along the magnetic field lines, and so the patches are not found directly above ground zero. Uncharged radiation (gamma-rays, neutrons and X-rays) is deposited in layers which are centered directly under the detonation point. The STARFISH event (1.4 megatons at 400 km) was in this altitude regime. Detonations at thousands of kilometres altitude are contained purely magnetically. Expansion is at less than the local Alfven speed, and so energy is radiated as hydromagnetic waves. Patch depositions are again aligned with the field lines.'

The Atomic Weapons Establishment site also showed a Monte Carlo model of STARFISH radiation belt development, indicating that the electron belt stretched a third of the way around the earth's equator at 3 minutes, and encircled the earth at 10 minutes. The averaged beta particle radiation flux in the belt was 2 x 10^14 electrons per square metre per second at 3 minutes after burst, falling to a quarter of that at 10 minutes. As time goes on, the radiation belt pushes up to higher altitudes and becomes more concentrated over the magnetic equator. For the first 5 minutes, the radiation belt has an altitude range of about 200-400 km and spans from 27 degrees south of the magnetic equator to 27 degrees north of it. At 1 day after burst, the radiation belt height has increased to the 600-1,100 km zone and the average flux is then 1.5 x 10^12 electrons/m^2/sec. At 4 months the altitude for this average flux (plus or minus a factor of 4) is confined to altitudes of 1,100-1,500 km, and it is covering a smaller latitude range around the magnetic equator, from about 20 degrees north to about 20 degrees south. At 95 years after burst, the remaining electrons will be 2,000 km above the magnetic equator, the latitude range will be only plus or minus 10 degrees from the equator, and the shell will only be 50 km thick.

Update: John B. Cladis, et al., “The Trapped Radiation Handbook”, Lockheed Palo Alto Research Laboratory, California, December 1971, AD-738841, Defense Nuclear Agency report DNA 2524H, 746 pages, is available online as a 57 MB PDF download linked here. (The key pages of nuclear test data, under 1 MB download, are linked here.) Page changes (updates) 3-5 separately available: change 3 (254 pages, 1974), change 4 (137 pages, 1977), and change 5 (102 pages, 1977).

This handbook discusses the Earth’s magnetic field trapping mechanism for electrons emitted by a nuclear explosion at high altitude or in outer space, including some unique satellite measured maps (Figures 6-15 and 6-16) of the trapped electron radiation belts created by Starfish, the 1.4 Mt American nuclear test at 400 km altitude on 9 July 1962 (Injun 1 data for 10 hours after burst and Telstar data for 48 hours). In addition, the handbook includes Telstar satellite measured maps of the trapped radiation shells for the 300 kt Russian tests at 290 and 150 km altitude on 22 and 28 October 1962 (Figures 6-23 and 6-24). The Russian space bursts were detonated at greater latitudes north than the Starfish burst, which occurred almost directly over Johnston Island, so they are more representative of a high altitude burst over most potential targets. On page 6-39 the handbook concludes that 7.5 x 10^25 electrons from Starfish (10 percent of its total emission) were initially trapped in the Earth’s magnetic field to form radiation belts in outer space (the rest were captured by the atmosphere). Page 6-54 concludes that the 300 kt, 290 km burst altitude 22 October 1962 Russian test had 3.6 x 10^25 of its electrons trapped in the radiation belts, while the 300 kt, 150 km altitude shot on 28 October had only one-third as many of its electrons trapped, and the 300 kt, 59 km altitude burst on 1 November had only 1.2 x 10^24 electrons trapped in space. So increasing the height of burst for a given yield greatly increased the percentage of the electrons trapped in radiation belts in space by the Earth’s magnetic field.
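The handbook figures quoted above can be laid side by side to show how strongly trapping depends on burst altitude for the three 300 kt Russian tests. This is a minimal sketch using only the numbers in the text, reading the electron counts as powers of ten (7.5 x 10^25, 3.6 x 10^25 and 1.2 x 10^24). The variable names are illustrative.

```python
# Trapped electrons for the three 300 kt Russian tests, keyed by burst
# altitude in km (figures quoted from the Trapped Radiation Handbook).
trapped_290_km = 3.6e25
trapped_electrons = {
    290: trapped_290_km,
    150: trapped_290_km / 3.0,   # "only one-third as many"
    59: 1.2e24,
}

# Starfish: 7.5e25 trapped electrons were 10 percent of total emission.
starfish_trapped = 7.5e25
starfish_total_emitted = starfish_trapped / 0.10

for altitude_km, count in sorted(trapped_electrons.items(), reverse=True):
    relative = count / trapped_290_km
    print(f"{altitude_km:>3} km burst: {count:.1e} electrons "
          f"({relative:.0%} of the 290 km test)")

print(f"Starfish total electron emission: {starfish_total_emitted:.1e}")
```

For the same yield, lowering the burst from 290 km to 59 km cuts the trapped electron count by a factor of 30.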

These data we give for the yields and burst heights for the 1962 Russian high altitude tests are the Russian data based on close-in accurate measurements and the yields of similar bombs under other conditions, released in 1995. The original American data on the Russian tests was relatively inaccurate since it was based on long-range EMP, air pressure wave, and trapped radiation belt measurements, but it has all recently been declassified by the CIA and is given in the CIA National Intelligence Estimate, July 2, 1963, on pages 43-44: "Joe 157" on 22 October 1962, "Joe 160" on 28 October and "Joe 168" on 1 November were initially assessed by America to be 200 kt, 200 kt and 1.8 Mt, detonated at altitudes of about 297 km, 167 km, and 93 km, respectively. As mentioned, the true yield was 300 kt in all cases and the true heights of burst were 290, 150 and 59 km. This is very interesting as it indicates how accurately the yield and burst altitude can be determined in the event of an unexpected nuclear test by an enemy, even with 1962 technology. The report also indicates that the Russians carefully scheduled their high altitude tests to be measured by their COSMOS XI satellite:

"A unique feature of all three 1962 high-altitude tests [by Russia] was the apparent planned use of a satellite to collect basic physical data. COSMOS XI passed over the burst point of JOE 157 within minutes of the detonation; it was at the antipodal point for the JOE 160 test at the time of detonation; and it was near the magnetic conjugate point of the JOE 168 detonation at time of burst."

A very brief (11 pages, 839 kb) compilation of the key pages with the vital nuclear test data from the long Trapped Radiation Handbook is linked here. The rate at which the radiation belts diminished with time was slow and hard to measure accurately, and is best determined by computer Monte Carlo simulations like the AWRE code discussed in this post. If the altitude of the “mirror points” (where the Earth’s stronger magnetic field near the poles reflects the spiralling electrons back) dips into the atmosphere, electrons are stopped and captured by air molecules instead of being reflected back into space. Therefore, electrons leak away at the mirror points if those points are at low enough altitudes.

When STARFISH was detonated: 'The large amount of energy released at such a high altitude by the detonation caused widespread auroras throughout the Pacific area, lasting in some cases as long as 15 minutes; these were observed on both sides of the equator. In Honolulu an overcast, nighttime sky was turned into day for 6 minutes (New York Times, 10 July 1962). Observers on Kwajalein 1,400 nautical miles (about 2,600 km) west reported a spectacular display lasting at least 7 minutes. At Johnston Island all major visible phenomena had disappeared by 7 minutes except for a faint red glow. The earth's magnetic field [measured at Johnston] also was observed to respond to the burst. ... On 13 July, 4 days after the shot, the U.K. satellite, Ariel, was unable to generate sufficient electricity to function properly. From then until early September things among the satellite designers and sponsors were "along the lines of the old Saturday matinee one-reeler" as the solar panels on several other satellites began to lose their ability to generate power (reference: The Artificial Radiation Belt, Defense Atomic Support Agency, 4 October 1962, report DASA-1327, page 2). The STARFISH detonation had generated large quantities of electrons that were trapped in the earth's magnetic field; the trapped electrons were damaging the solar cells that generated the power in the panels.' (Source: Defense Nuclear Agency report DNA-6040F, AD-A136820, pp. 229-30.)

Above: the conjugate region aurora from STARFISH, 4,200 km from the detonation, as seen from Tongatapu 11 minutes after detonation. (Reference: W. P. Boquist and J. W. Snyder, 'Conjugate Auroral Measurements from the 1962 U.S. High Altitude Nuclear Test Series', in Aurora and Airglow, B. M. McCormac, Ed., Reinhold Publishing Corp., 1967.) A debris aurora caused by fission product ions travelling along magnetic field lines to the opposite hemisphere requires a burst altitude above 150 km, and in the STARFISH test at 400 km some 40% of the fission products were transported south along the magnetic field lines into the conjugate region (50% was confined locally and 10% escaped into space). The resulting colourful aurora was filmed at Tongatapu (21 degrees south) looking north, and it was also seen looking south from Samoa (14 degrees south). The STARFISH debris reached an altitude of about 900 km when passing over the magnetic equator. The debris in the conjugate region behaves like the debris remaining in the burst locale; over the course of 2 hours following detonation, it simply settles back down along the Earth’s magnetic field lines to an altitude of 200 km (assuming a burst altitude exceeding 85 km). Hence, the debris is displaced towards the nearest magnetic pole. The exact ‘offset distance’ depends simply upon the angle of the Earth’s magnetic field lines. The ionisation in the debris region is important since it can disrupt communications if the radio signals need to pass through the region to reach an orbital satellite, and also because it may prevent radar systems from spotting incoming warheads (since radar beams are radio signals which are attenuated).

In the Pacific nuclear high altitude megaton tests, communications using ionosphere-reflected high frequency (HF) radio were disrupted for hours at both ends of the geomagnetic field lines which passed through the detonation point. However, today HF is obsolete and the much higher frequencies involved do not suffer so much attenuation. Instead of relying on the ionosphere and conducting ocean to form a reflecting wave-guide for HF radio, the standard practice today is to use microwave frequencies that penetrate right through the normal ionosphere and are beamed back to another area by an orbital satellite. These frequencies can still be attenuated by severe ionisation from a space burst, but the duration of disruption will be dramatically reduced to seconds or minutes.

‘Recently analyzed beta particle and magnetic field measurements obtained from five instrumented rocket payloads located around the 1962 Starfish nuclear burst are used to describe the diamagnetic cavity produced in the geomagnetic field. Three of the payloads were located in the cavity during its expansion and collapse, one payload was below, and the fifth was above the fully expanded cavity. This multipoint data set shows that the cavity expanded into an elongated shape 1,840 km along the magnetic field lines and 680 km vertically across in 1.2 s and required an unexpectedly long time of about 16 s to collapse. The beta flux contained inside the cavity was measured to be relatively uniform throughout and remained at 3 × 10^11 beta particles/cm^2 s for at least 7 s. The plasma continued to expand upward beyond the fully expanded cavity boundary and injected a flux measuring 2.5 × 10^10 beta particles/cm^2 s at H + 34 s into the most intense region of the artificial belt. Measured 10 hours later by the Injun I spacecraft, this flux was determined to be 1 × 10^9 beta particles/cm^2 s.’ - Palmer Dyal, ‘Particle and field measurements of the Starfish diamagnetic cavity’, Journal of Geophysical Research, volume 111, issue A12, page 211 (2006).

Palmer Dyal was the nuclear test Project Officer and co-author with W. Simmons of Operation DOMINIC, FISH BOWL Series, Project 6.7, Debris Expansion Experiment, U.S. Air Force Weapons Laboratory, Kirtland Air Force Base, New Mexico, POR-2026 (WT-2026), AD-A995428, December 1965:

'This experiment was designed to measure the interaction of expanding nuclear weapon debris with the ion-loaded geomagnetic field. Five rockets on STARFISH and two rockets on CHECKMATE were used to position instrumented payloads at various distances around the burst points. The instruments measured the magnetic field, ion flux, beta flux, gamma flux, and the neutron flux as a function of time and space around the detonations. Data was transmitted at both real and recorded times to island receiving sites near the burst regions. Measurements of the telemetry signal strengths at these sites allowed observations of blackout at 250 MHz ... the early expansion of the STARFISH debris probably took the form of an ellipsoid with its major axis oriented along the earth's magnetic field lines. Collapse of the magnetic bubble was complete in approximately 16 seconds, and part of the fission fragment beta particles were subsequently injected into trapped orbits. ...

‘At altitudes above 200 kilometres ... the particles travel unimpeded for several thousands of kilometres. During the early phase of a high-altitude explosion, a large percentage of the detonation products is ionized and can therefore interact with the geomagnetic field and can also undergo Coulomb scattering with the ambient air atoms. If the expansion is high enough above the atmosphere, an Argus shell of electrons can be formed as in the 1958 and 1962 test series. ... If this velocity of the plasma is greater than the local sound or Alfven speed, a magnetic shock similar to a hydro shock can be formed which dissipates a sizable fraction of the plasma kinetic energy. The Alfven velocity is $C = B/\left(4\pi \cdot \{\text{ion density, in ions per cubic metre}\}\right)^{1/2}$, where ... B is the magnetic field ... Since the STARFISH debris expansion was predicted and measured to be approximately 2 x 10^8 cm/sec and the Alfven velocity is about 2 x 10^7 cm/sec, a shock should be formed. A consideration of the conservation of momentum and energy indicates that the total extent of the plasma expansion proceeds until the weapon plasma kinetic energy is balanced by the $B^2/(8\pi)$ magnetic field energy [density] in the excluded region and the energy of the air molecules picked up by the expanding debris. ... An estimate of the maximum radial extent of the STARFISH magnetic bubble can be made assuming conservation of momentum and energy. The magnetic field swept along by the plasma electrons will pick up ambient air ions as it proceeds outward. ...’

Conservation of momentum suggests that the initial outward bomb momentum, $M_{\mathrm{BOMB}} V_{\mathrm{BOMB}}$, must be equal to the momentum of the total expanding fireball after it has picked up air ions of mass $M_{\mathrm{AIR\,IONS}}$:

$M_{\mathrm{BOMB}} V_{\mathrm{BOMB}} = (M_{\mathrm{BOMB}} + M_{\mathrm{AIR\,IONS}}) V$,

where V is the velocity of the combined shell of bomb and air ions. The expansion of the ionized material against the earth’s magnetic field slows it down, so that the maximum radial extent occurs when the initial kinetic energy $E = \tfrac{1}{2} M_{\mathrm{BOMB}} V_{\mathrm{BOMB}}^2$ has been converted into the potential energy of the magnetic field which stops its expansion. The energy of the magnetic field excluded from the ionized shell of radius R is simply the volume of that shell multiplied by the magnetic field energy density $B^2/(8\pi)$. By setting the energy of the magnetic field bubble equal to the kinetic energy of the explosion, the maximum size of the bubble could be calculated, assuming the debris was 100% ionized.
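The energy balance just described can be turned into a rough number. The Python sketch below is a minimal illustration under loudly stated assumptions, not the calculation in the Project 6.7 report: it treats the bubble as a sphere, ignores the energy given to swept-up air, takes an illustrative 25% of the 1.4 Mt yield as debris kinetic energy, and uses a 0.3 Gauss field. The 25% fraction, the field value and all function names are hypothetical choices made here.

```python
import math

KT_TO_ERG = 4.184e19  # 1 kt of TNT = 4.184e12 J = 4.184e19 erg


def combined_shell_velocity(m_bomb, v_bomb, m_air_ions):
    """Solve M_bomb * V_bomb = (M_bomb + M_air_ions) * V for V."""
    return m_bomb * v_bomb / (m_bomb + m_air_ions)


def magnetic_energy_density(b_gauss):
    """Field energy density B^2 / (8 pi) in erg/cm^3 (Gaussian units)."""
    return b_gauss ** 2 / (8.0 * math.pi)


def max_bubble_radius_cm(kinetic_energy_erg, b_gauss):
    """Radius of the sphere whose excluded field energy equals the KE.

    Sets (4/3) * pi * R^3 * B^2 / (8 pi) = kinetic energy and solves for R.
    """
    volume_cm3 = kinetic_energy_erg / magnetic_energy_density(b_gauss)
    return (3.0 * volume_cm3 / (4.0 * math.pi)) ** (1.0 / 3.0)


if __name__ == "__main__":
    yield_kt = 1400.0          # Starfish yield from the text
    debris_ke_fraction = 0.25  # ASSUMPTION: illustrative value only
    b_gauss = 0.3              # ASSUMPTION: low-latitude field strength

    ke_erg = debris_ke_fraction * yield_kt * KT_TO_ERG
    radius_km = max_bubble_radius_cm(ke_erg, b_gauss) / 1.0e5
    print(f"Spherical bubble radius: about {radius_km:.0f} km")

    # Ratio of the quoted debris speed to the quoted Alfven speed.
    print(f"Alfven Mach number: {2.0e8 / 2.0e7:.0f}")
```

With these inputs the radius comes out at the order of a thousand kilometres, the same scale as the measured cavity of 1,840 km by 680 km. Because the radius depends only on the cube root of the energy, the answer is not very sensitive to the assumed kinetic energy fraction.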

For CHECKMATE, they reported: ‘Expansion of the debris was mostly determined by the surrounding atmosphere which had a density of 4.8 x 10^10 particles/cm^3.’

Richard L. Wakefield's curve above, although it suffers from many instrument problems, explained EMP damage on Hawaii some 1,300 km from the burst point - see map below. Dr Longmire explained Wakefield's curve by a brand new EMP theory called the 'magnetic dipole mechanism' - a fancy name for the deflection at high altitudes of electrons by the Earth's natural magnetic dipole field. The original plan for the Starfish test is declassified here, and the first report on the effects is declassified here. The zig-zag on the measured curve above is just 'ringing' in the instrument, not in the EMP. The inductance, capacitance, and resistance combination of the electronic circuit in the oscilloscope used to measure the EMP evidently had a natural resonance - rather like a ringing bell - at a frequency of 110 MHz, which was set off by the rapid rise in the first part of the EMP and continued oscillating for more than 500 ns. The wavy curve from the instrument is thus superimposed on the real EMP.
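The ringing figures above are easy to sanity-check. The Python sketch below works out the period of a 110 MHz oscillation and how many cycles fit into 500 ns, and then shows what capacitance would resonate at that frequency with a given inductance. The 0.1 microhenry inductance is purely a hypothetical value chosen for illustration; the actual circuit values of Wakefield's oscilloscope are not given in the sources quoted here.

```python
import math


def ringing_period_ns(freq_hz):
    """Period of one oscillation, in nanoseconds."""
    return 1.0e9 / freq_hz


def cycles_in(duration_ns, freq_hz):
    """Number of oscillation cycles completed in the given duration."""
    return duration_ns * 1.0e-9 * freq_hz


def lc_resonant_frequency_hz(inductance_h, capacitance_f):
    """Natural frequency of an ideal LC circuit: f = 1 / (2 pi sqrt(L C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def capacitance_for_resonance_f(freq_hz, inductance_h):
    """Capacitance that resonates at freq_hz with the given inductance."""
    return 1.0 / ((2.0 * math.pi * freq_hz) ** 2 * inductance_h)


if __name__ == "__main__":
    freq = 110.0e6  # ringing frequency quoted in the text
    print(f"Period: {ringing_period_ns(freq):.2f} ns")
    print(f"Cycles in 500 ns: {cycles_in(500.0, freq):.0f}")

    inductance = 0.1e-6  # HYPOTHETICAL stray inductance, henries
    cap = capacitance_for_resonance_f(freq, inductance)
    print(f"Capacitance for 110 MHz with 0.1 uH: {cap * 1e12:.1f} pF")
```

A period of about 9 ns means the instrument rang through some 55 cycles during the 500 ns record, which is why the ripple looks so dense on the measured curve.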

Above: raw data released by America so far on the Starfish EMP consists of the graph on the left based on a measurement by Richard L. Wakefield in a C-130 aircraft 1,400 km East-South-East of the detonation, with a CHAP (code for high altitude pulse) Longmire computer simulation for that curve both with and without instrument response corrections (taken from Figure 9 of the book EMP Interaction, online edition), and the graph on the right which is Longmire's CHAP calculation of the EMP at Honolulu, 1,300 km East-North-East of the detonation (page 7 of Longmire's report EMP technical note 353, March 1985). By comparing the various curves, you can guess the correct scales for the graph on the left and also what the time-dependent instrument response is.

Above: locations of test aircraft which suffered EMP damage during Operation Fishbowl in 1962. In testimony to the 1997 U.S. Congressional Hearings on EMP, Dr. George W. Ullrich, the Deputy Director of the U.S. Department of Defense's Defense Special Weapons Agency (now the DTRA, Defense Threat Reduction Agency) said that the lower-yield Fishbowl tests after Starfish 'produced electronic upsets on an instrumentation aircraft that was approximately 300 kilometers away from the detonations.' The report by Charles N. Vittitoe, 'Did high-altitude EMP (electromagnetic pulse) cause the Hawaiian streetlight incident?', Sandia National Labs., Albuquerque, NM, report SAND-88-0043C; conference CONF-880852-1 (1988) states on page 3: 'Several damage effects have been attributed to the high-altitude EMP. Tesche notes the input-circuit troubles in radio receivers during the Starfish [1.4 Mt, 400 km altitude] and Checkmate [7 kt, 147 km altitude] bursts; the triggering of surge arresters on an airplane with a trailing-wire antenna during Starfish, Checkmate, and Bluegill [410 kt, 48 km altitude] ...'

Below are the prompt EMP waveforms measured in California, 5,400 km away from Starfish (1.4 Mt, 400 km altitude) and Kingfish (410 kt, 95 km altitude) space shots above Johnston Island in 1962:

It is surprising to find that on 11 January 1963, the American journal Electronics Vol. 36, Issue No. 2, had openly published the distant MHD-EMP waveforms from all five 1962 American high altitude detonations, Starfish, Bluegill, Kingfish, Checkmate, and Tightrope: 'Recordings made during the high-altitude nuclear explosions over Johnston Island, from July to November 1962, shed new light on the electromagnetic waves associated with nuclear blasts. Hydrodynamic wave theory is used to explain the main part of the signal from a scope. The results recorded for five blasts are described briefly. The scopes were triggered about 30 micro-seconds before the arrival of the main spike of the electromagnetic pulse.'

Above: if we ignore the late-time MHD-EMP mechanism (which takes seconds to minutes to peak and has extremely low frequencies) there are three EMP mechanisms at play in determining the radiated EMP as a function of burst altitude. This diagram plots typical peak radiated EMP signals from 1 kt and 1 Mt bombs as a function of altitude for an observer at a fixed distance of 100 km from ground zero. For very low burst altitudes, the major cause of EMP radiation is the asymmetry due to the Earth's surface (there is a net upward Compton current due to the ground absorbing downward-directed gamma rays). This is just like a vertical 'electric dipole' radio transmitter antenna radiating radio waves horizontally (at right angles to the direction of the time-varying current) when the vertical current supplied to the antenna is varied in time. Dolan's DNA-EM-1 states that a 1 Mt surface burst radiates a peak EMP of 1,650 V/m at 7.2 km distance (which falls off inversely with distance for greater distances). As the burst altitude is increased above about 1 km or so, this ground asymmetry mechanism becomes less important because the gamma rays take 1 microsecond to travel 300 metres and don't reach the ground with much intensity; in any case by that time the EMP has been emitted by another mechanism of asymmetry, the fall in air density with increasing altitude, which is particularly important for bursts of 1-10 km altitude. Finally, detonations above 10 km altitude send gamma rays into air of low density, so that the Compton electrons have the chance (before hitting air molecules!) to be deflected significantly by the Earth's magnetic field; this 'magnetic dipole' deflection makes them emit synchrotron radiation, which is the massive EMP hazard from space bursts that was discovered by Dr Conrad Longmire after the Starfish test on 9 July 1962.
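As a rough illustration of the inverse-distance fall-off quoted from DNA-EM-1, the Python sketch below scales the 1,650 V/m reference value at 7.2 km out to larger ranges and also checks the gamma ray travel time over 300 metres. It assumes a pure 1/r law beyond the reference distance, as the text states, and deliberately refuses to extrapolate inside 7.2 km; the function names are invented for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

# Reference point quoted from DNA-EM-1 for a 1 Mt surface burst.
REFERENCE_FIELD_V_PER_M = 1650.0
REFERENCE_DISTANCE_KM = 7.2


def surface_burst_peak_field_v_per_m(distance_km):
    """Peak radiated field, assuming a simple 1/r fall-off beyond 7.2 km."""
    if distance_km < REFERENCE_DISTANCE_KM:
        # The quoted scaling only applies at greater distances.
        raise ValueError("1/r scaling is only stated for distances >= 7.2 km")
    return REFERENCE_FIELD_V_PER_M * REFERENCE_DISTANCE_KM / distance_km


def gamma_travel_time_us(distance_m):
    """Time for gamma rays (moving at light speed) to cover distance_m."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1.0e6


if __name__ == "__main__":
    for d_km in (7.2, 20.0, 100.0):
        field = surface_burst_peak_field_v_per_m(d_km)
        print(f"{d_km:6.1f} km: {field:7.1f} V/m")
    print(f"Gamma travel time over 300 m: {gamma_travel_time_us(300.0):.3f} us")
```

At the 100 km observer distance used in the diagram, this simple scaling gives a little under 120 V/m for the 1 Mt surface burst.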
After the Starfish EMP was measured by Richard Wakefield, the Americans started looking for 'magnetic dipole' EMP from normal megaton air bursts dropped from B-52 aircraft (at a few km altitude to prevent local fallout). Until then they measured EMP from air bursts using oscilloscopes set to measure EMP with durations of tens of microseconds. By increasing the sweep speed to sub-microsecond times (nanoseconds), they were then able to see the positive pulse of 'magnetic dipole' EMP even in sea level air bursts at relatively low altitude, typically peaking at 18 V/m at 70 nanoseconds for 20 km distance as in the following illustration from LA-2808:

Above: the long-duration, weak field electric-dipole EMP waveform due to vertical asymmetry from a typical air burst, measured 4,700 km from the Chinese 200 kt shot on 8 May 1966.

Because of Nobel Laureate Dr Hans Bethe's error in predicting the EMP mechanism for high altitude bursts back in 1958 (he predicted the electric dipole EMP, neglecting both the magnetic dipole mechanism and the MHD/auroral EMP mechanisms), almost all the instruments were set to measure a longer and less intense EMP with a different polarization (vertical, not horizontal), and at best they only recorded vertical-looking spikes which went off-scale and provided zero information about the peak EMP. In the 1958 tests Teak and Orange, there was hardly any information at all due to both this instrumentation problem and missile errors.

Above: the American 1.4 Mt Starfish test at 400 km, on 9 July 1962, induced large EMP currents in the overhead wires of 30 strings of Oahu streetlights, each string having 10 lights (300 streetlights in all). The induced current was sufficient to blow the fuses. EMP currents in the power lines set off ‘hundreds’ of household burglar alarms and opened many power line circuit breakers. On the island of Kauai, EMP closed down telephone calls to the other islands despite the sturdy 1962 relay (electromechanical) telephone technology, by damaging the microwave diode in the electronic microwave link used to connect the telephone systems between different Hawaiian islands (because of the depth of the ocean between the islands, the use of undersea cables was impractical). If the Starfish Prime warhead had been detonated over the northern continental United States, the magnitude of the EMP would have been about 2.4 times larger because of the stronger magnetic field over the USA which deflects Compton electrons to produce EMP, while the much longer power lines over the USA would pick up a lot more EMP energy than the short power lines in the Hawaiian islands. Finally, the electronic 'vacuum tubes' or 'triode valves' that were commonplace in 1962 (used before transistors and microchips became common), which could survive 1-2 Joules of EMP, have now been completely replaced by modern semiconductor microchips which are millions of times more sensitive to EMP (burning out at typically 1 microJoule of EMP energy or less). This is simply because they pack millions of times more components into the same space, so the over-heating problem is far worse for a very sudden EMP power surge (rising within a microsecond). Heat can't be dissipated fast enough, so the microchip literally melts or burns up under EMP exposure, while older electronics can take a lot more punishment. So new electronics is a million times more vulnerable than in 1962.

'The time interval detectors used on Maui went off scale, probably due to an unexpectedly large electromagnetic signal ...' - A 'Quick Look' at the Technical Results of Starfish Prime, 1962, p. A1-27.

The illustration of Richard Wakefield's EMP measurement from the Starfish test is based on the unclassified reference, K. S. H. Lee's 1986 book, EMP Interaction. (The earlier, 1980, edition is now online here as a 28 MB download, and it contains the Starfish EMP data.) However, although that reference gives the graph data (including instrument-corrected data from an early computer study called ‘CHAP’ - Code for High Altitude Pulse, by Longmire in 1974), it omits the scales from the graph for the time and electric field, which need to be filled in from another graph declassified separately in Dolan's DNA-EM-1. Full calculations of EMP as a function of burst altitude are also online in pages 33 and 36 of Louis W. Seiler, Jr., A Calculational Model for High Altitude EMP, report AD-A009208, March 1975.

The recently declassified report on Starfish states that Richard L. Wakefield's measurement - the only one at the extremely high frequency that measured the peak EMP with some degree of success - was an attempt to measure the time-interval between the first and secondary stage explosions in the weapon (the fission primary produces one pulse of gamma rays, which subsides before the final thermonuclear stage signal). Wakefield's report title is (taken from page 44 of the declassified Starfish report):

Measurement of time interval from electromagnetic signal received in C-130 aircraft, 753 nautical miles from burst, at 11 degrees 16 minutes North, 115 degrees 7 minutes West, 24,750 feet.

There is really no wonder why it remains secret: the title alone tells you that you can measure not just the emission from the bomb but the internal functioning (the time interval between the primary fission stage and secondary thermonuclear stage!) of the bomb, just by photographing an oscilloscope with a suitable sweep speed, connected to an antenna, from an aircraft 1,400 km away flying at an altitude of 24,750 feet! The longitude of the measurement is clearly in error as it doesn't correspond to the stated distance from ground zero. Presumably there is a typing error and the C-130 was at 155 degrees 7 minutes West, not 115 degrees 7 minutes. This would put the position of Wakefield's C-130 some 800 km or so South of the Hawaiian islands at detonation time. The report also shows why all the other EMP measurements failed to measure the peak field: they were almost all made in the ELF and VLF frequency bands, corresponding to rise times in milliseconds and seconds, not nanoseconds. They were concentrating on measuring the magnetohydrodynamic (MHD) EMP due to the ionized fireball expansion displacing the Earth's magnetic field, and totally ignored the possibility of a magnetic dipole EMP from the deflection of Compton electrons by the Earth's magnetic field.

Notice that the raw data from Starfish - without correction for the poor response of the oscilloscope's aerial orientation and amplifier circuit to the EMP - indicates a somewhat lower peak electric field at a later time than the properly corrected EMP curve. The true peak was 5,210 V/m at 22 nanoseconds (if this scale is correct; notice that Longmire's reconstruction of the Starfish EMP at Honolulu using CHAP gave 5,600 V/m peaking at 100 ns). The late-time (MHD-EMP) data for Starfish shown is for the horizontal field and is available online in Figure 6 of the arXiv filed report here by Dr Mario Rabinowitz.

Dr Rabinowitz has also compiled a paper here, which quotes some incompetent political 'off the top of my head' testimony from clowns at hearings from the early 1960s, which suggests that Starfish Prime did not detonate over Johnston Island but much closer to Hawaii, but the burst position was accurately determined from theodolite cameras to be 16° 28' 6.32" N and 169° 37' 48.27" W (DASA-1251 which has been in the public domain since 1979 gives this, along with the differing exact burst positions of other tests; this is not the position of launch or an arbitrary point in Johnston Island but is the detonation point). The coordinates of Johnston Island launch area are 16° 44' 15" N and 169° 31' 26" W (see this site), so Starfish Prime occurred about 16 minutes (nautical miles) south of the launch pad and about 6 minutes (nautical miles) west of the launch pad, i.e., 32 km from the launch pad (this is confirmed on page 6 of the now-declassified Starfish report available online).

Hence, Starfish Prime actually detonated slightly further away from Hawaii than the launch pad, instead of much closer to Hawaii! The detonation point was around 32 km south-south-west of Johnston Island, as well as being 400 km up. It is however true as Rabinowitz records that the 300 streetlights fused in the Hawaiian Islands by Starfish were only 1-3% of the total number. But I shall have more to say about this later on, particularly after reviewing extensive Russian EMP experiences with long shallow-buried power lines and long overhead telephone lines which Dr Rabinowitz did not know about in 1987 when writing the critical report.
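The 32 km offset and the south-south-west direction can be checked directly from the two sets of coordinates quoted above. The Python sketch below uses the standard haversine great-circle formula on a spherical Earth of mean radius 6,371 km, which is an approximation introduced here (an ellipsoidal model would shift the answer slightly); the helper names are made up for the example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth ASSUMPTION


def dms_to_degrees(degrees, minutes, seconds, negative=False):
    """Convert degrees/minutes/seconds to decimal degrees.

    Set negative=True for southern latitudes or western longitudes.
    """
    value = degrees + minutes / 60.0 + seconds / 3600.0
    return -value if negative else value


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Compass bearing (0 = north, 90 = east) from point 1 towards point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlam = math.radians(lon2 - lon1)
    y = math.sin(dlam) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlam))
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


# Coordinates as quoted in the text (west longitudes are negative).
BURST = (dms_to_degrees(16, 28, 6.32),
         dms_to_degrees(169, 37, 48.27, negative=True))
LAUNCH_PAD = (dms_to_degrees(16, 44, 15),
              dms_to_degrees(169, 31, 26, negative=True))

if __name__ == "__main__":
    distance = haversine_km(*LAUNCH_PAD, *BURST)
    bearing = initial_bearing_deg(*LAUNCH_PAD, *BURST)
    print(f"Ground distance, launch pad to burst point: {distance:.1f} km")
    print(f"Bearing from launch pad: {bearing:.0f} degrees")
```

The horizontal offset comes out at close to 32 km on a bearing of roughly 200 degrees, which is south-south-west, in agreement with the figures given above.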

Above: EMP waveform for all times (logarithmic axes) and frequency spectra for a nominal high altitude detonation (P. Dittmer et al., DNA EMP Course Study Guide, Defense Nuclear Agency, DNA Report DNA-H-86-68-V2, May 1986). The first EMP signal comes from the prompt gamma rays of fission and gamma rays released within the bomb due to the inelastic scatter of neutrons with the atoms of the weapon. For a fission weapon, about 3.5% of the total energy emerges as prompt gamma rays, and this is added to by the gamma rays due to inelastic neutron scatter in the bomb. But despite their high energy (typically 2 MeV), most of these gamma rays are absorbed by the weapon's materials, and don't escape from the bomb casing. Typically only 0.1-0.5% of the bomb energy is actually radiated as prompt gamma rays (the lower figure applying to massive, old fashioned high-yield Teller-Ulam multimegaton thermonuclear weapons with thick outer casings, and the high figure to lightweight, low-yield weapons, with relatively thin outer casings). The next part of the EMP from a space burst comes from inelastic scatter of neutrons as they hit air molecules. Then, after those neutrons are slowed down a lot by successive inelastic scattering in the air (releasing gamma rays each time), they are finally captured by the nuclei of nitrogen atoms, which causes gamma rays to be emitted and a further EMP signal which adds to the gamma rays from decaying fission product debris. Finally, you get an EMP signal at 1-10 seconds from the magnetohydrodynamic (MHD) mechanism, where the ionized fireball expansion pushes out the earth's magnetic field (which can't enter an electrically-conductive, ionized region) with a frequency of less than 1 Hertz, and then the auroral motion of charged particles from the detonation (spiralling along the earth's magnetic field between conjugate points in opposite magnetic hemispheres) constitutes another motion of charge (i.e. a time-varying electric current) which sends out a final EMP at extremely low frequencies, typically 0.01 Hertz.
These extremely low frequencies, unlike the high frequencies, can penetrate substantial depths underground, where they can induce substantial electric currents in very long (over 100 km long) buried cables.
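To give a feel for the prompt gamma ray figures above, the short Python sketch below converts a total yield into a prompt gamma output using the quoted 0.1-0.5% escape range. It is only an illustration: which end of the range applies to any particular weapon is not something the sketch decides, and the function name is invented here.

```python
# Escape fractions quoted in the text: 0.1% (heavy, thick-cased weapons)
# to 0.5% (light, thin-cased weapons) of the total yield.
LOW_ESCAPE_FRACTION = 0.001
HIGH_ESCAPE_FRACTION = 0.005


def prompt_gamma_yield_kt(total_yield_kt, escape_fraction):
    """Energy radiated as prompt gamma rays, in kilotons."""
    if not 0.0 <= escape_fraction <= 1.0:
        raise ValueError("escape_fraction must lie between 0 and 1")
    return total_yield_kt * escape_fraction


if __name__ == "__main__":
    for total_kt in (10.0, 300.0, 1400.0):
        low = prompt_gamma_yield_kt(total_kt, LOW_ESCAPE_FRACTION)
        high = prompt_gamma_yield_kt(total_kt, HIGH_ESCAPE_FRACTION)
        print(f"{total_kt:7.0f} kt total: {low:.2f} to {high:.2f} kt of prompt gammas")
```

For a 1.4 Mt weapon the range works out to 1.4-7 kt of prompt gamma rays, far below the 3.5% that emerges from the fission process itself before absorption in the weapon.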

Above: the late-time magnetohydrodynamic EMP (MHD-EMP) measured by the change in the natural magnetic field flux density as a function of time after American tests Starfish (1.4 Mt, 400 km burst altitude), Checkmate (7 kt, 147 km burst altitude) and Kingfish (410 kt, 95 km burst altitude) at Johnston Island, below the detonations. The first (positive) pulse in each case is due to the ionized (diamagnetic) fireball expanding and pushing out the earth's magnetic field, which cannot penetrate into a conductive cavity such as an ionized fireball. Consequently, the pushed-out magnetic field lines become bunched up outside the fireball, which means that the magnetic field intensity increases (the magnetic field intensity can be defined by the concentration of the magnetic field lines in space). Under the fireball - as in the case of the data above, measured at Johnston Island, which was effectively below the fireball in each case - there is a patch of ionized air caused by X-rays being absorbed from the explosion, and this patch shields in part the first pulse of MHD-EMP (i.e., that from the expansion of the fireball which pushes out the earth's magnetic field). The second (negative) pulse of the late-time EMP is bigger in the case of the Starfish test, because it is unshielded: this large negative pulse is simply due to the auroral effect of the ionized fireball rising and moving along the earth's magnetic field lines. This motion of ionized fission product debris constitutes a large varying electric current for a high yield burst like Starfish, and as a result of this varying current, the accelerating charges radiate an EMP signal which can peak at a minute or so after detonation.

Above: the measured late-time MHD-EMP at Hawaii, 1,500 km from the Starfish test, was stronger than at Johnston Island (directly below the burst!) because of the ionized X-ray patch of conductive air below the bomb, which shielded Johnston Island. The locations of these patches of ionized air below bursts at various altitudes are discussed in the blog post linked here.

Above: correlation of global measurements of the Starfish MHD-EMP late signal which peaked 3-5 seconds after detonation.

The 3 stages of MHD-EMP:

- Expansion of ionized, electrically conducting fireball excludes and so pushes out Earth’s magnetic field lines, causing an EMP. This peaks within 10 seconds. However, the air directly below the detonation is ionized and heated by X-rays so that it is electrically conducting and thus partly shields the ground directly below the burst from the late-time low-frequency EMP.

- An MHD-EMP wave then propagates between the ionosphere’s F-layer and the ground, right around the planet.

- The final stage of the late-time EMP is due to the aurora effect of charged particles and fission products physically moving along the Earth’s magnetic field lines towards the opposite pole. This motion of charge constitutes a large time-varying electric current which emits the final pulse of EMP, which travels around the world.

MHD-EMP has serious effects on long conductors because its extremely low frequencies (ELF) penetrate much further into the ground than higher frequencies can. This was proved by its effect on a long buried power line during the Russian test of a 300 kt warhead at 290 km altitude (44.84° N, 66.05° E) on 22 October 1962 near Dzhezkazgan in Kazakhstan (one of the Russian ABM system proof tests). In this test, the prompt gamma ray-produced EMP induced a current of 2,500 amps, measured by spark gaps, in a 570-km stretch of overhead telephone line out to Zharyq, blowing all the protective fuses. But the late-time MHD-EMP was of special interest because its frequency was low enough to penetrate 90 cm into the ground, overloading a shallow-buried, lead and steel tape-protected, 1,000-km long power cable between Aqmola and Almaty, tripping circuit breakers and setting the Karaganda power plant on fire. The test produced an MHD-EMP magnetic field of 1,025 nT measured at ground zero, 420 nT at 433 km, and 240 nT at 574 km distance. Along ground of conductivity 10^-3 S/m, 400 V was induced in a cable 80 km long, implying an MHD-EMP of 5 V/km.
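The 5 V/km figure is simply the induced voltage divided by the cable length. The short Python sketch below reproduces that arithmetic; the function name is hypothetical, and it assumes the induced electric field is uniform along the cable, which is only a rough approximation since the measured magnetic field falls off with distance from ground zero.

```python
def average_induced_field_v_per_km(induced_voltage_v, cable_length_km):
    """Average horizontal electric field along a cable, in volts per km.

    Assumes (for illustration only) that the field is uniform along the
    whole length of the conductor.
    """
    if cable_length_km <= 0:
        raise ValueError("cable length must be positive")
    return induced_voltage_v / cable_length_km


# Figures quoted in the text: 400 V induced in an 80 km cable.
field = average_induced_field_v_per_km(400.0, 80.0)
print(f"Average MHD-EMP field: {field:.1f} V/km")
```

Because the voltage scales with length for a given field, long lines are the conductors most at risk from MHD-EMP.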

Above: the incendiary effects of a relatively weak but natural MHD-EMP from the geomagnetic solar storm of 13 March 1989 in saturating the core of a transformer in the Hydro-Quebec electric power grid. Hydro-Quebec lost electric power, cutting the supply of electricity to 6 million people for several hours, and it took 9 hours to restore 83% (21.5 GW) of the power supply (1 million people were still without electric power then). Two 400/275 kV autotransformers were also damaged in England:

'In addition, at the Salem nuclear power plant in New Jersey, a 1200 MVA, 500 kV transformer was damaged beyond repair when portions of its structure failed due to thermal stress. The failure was caused by stray magnetic flux impinging on the transformer core. Fortunately, a replacement transformer was readily available; otherwise the plant would have been down for a year, which is the normal delivery time for larger power transformers. The two autotransformers in southern England were also damaged from stray flux that produced hot spots, which caused significant gassing from the breakdown of the insulating oil.' - EMP Commission report, 'Critical National Infrastructures', 2008, page 42.

A study of these effects is linked here. Similar effects from the Russian 300 kt nuclear test at 290 km altitude over Dzhezkazgan in Kazakhstan on 22 October 1962 induced enough current in a 1,000 km long protected underground cable to burn the Karaganda power plant to the ground. Dr Lowell Wood testified on 8 March 2005 during Senate Hearings 109-30 that these MHD-EMP effects are: 'the type of damage which is seen with transformers in the core of geomagnetic storms. The geomagnetic storm, in turn, is a very tepid, weak flavor of the so-called slow component of EMP. So when those transformers are subjected to the slow component of the EMP, they basically burn, not due to the EMP itself but due to the interaction of the EMP and normal power system operation. Transformers burn, and when they burn, sir, they go and they are not repairable, and they get replaced, as you very aptly pointed out, from only foreign sources. The United States, as part of its comparative advantage, no longer makes big power transformers anywhere at all. They are all sourced from abroad. And when you want a new one, you order it and it is delivered - it is, first of all, manufactured. They don't stockpile them. There is no inventory. It is manufactured, it is shipped, and then it is delivered by very complex and tedious means within the U.S. because they are very large and very massive objects. They come in slowly and painfully. Typical sort of delays from the time that you order until the time that you have a transformer in service are one to 2 years, and that is with everything working great. 
If the United States was already out of power and it suddenly needed a few hundred new transformers because of burnout, you could understand why we found not that it would take a year or two to recover, it might take decades, because you burn down the national plant, you have no way of fixing it and really no way of reconstituting it other than waiting for slow-moving foreign manufacturers to very slowly reconstitute an entire continent's worth of burned down power plant.'

MEASURED ELECTROMAGNETIC PULSE (E.M.P.) EFFECTS FROM SPACE TESTS

‘The British Government and our scientists have … been kept fully informed ... the fall-out from these very high-altitude tests is negligible ... the purpose of this experiment is of the greatest importance from the point of view of defence, for it is intended to find out how radio, radar, and other communications systems on which all defence depends might be temporarily put out of action by explosions of this kind.’ – British Prime Minister Harold Macmillan, Statement to the House of Commons, 8 May 1962.

‘Detonations above about 130,000 feet [40 km] produce EMP effects on the ground … of sufficient magnitude to damage electrical and electronic equipment.’ – Philip J. Dolan, editor, Capabilities of Nuclear Weapons, U.S. Department of Defense, 1981, DNA-EM-1, c. 1, p. 19, originally ‘Secret – Restricted Data’ (declassified and released on 13 February 1989).

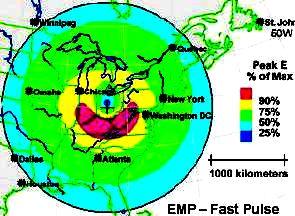

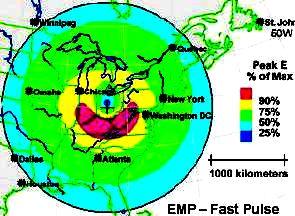

Above: area coverage by the first (fast or 'magnetic dipole mechanism') peak EMP and by the second (slow or 'magneto-hydrodynamic, MHD-EMP, mechanism') for a 10-20 kt single stage (pure fission) thin-cased burst at 150 km altitude. Both sets of contours are slightly distorted from circles by the effect of the earth's slanting magnetic field (the burst is assumed to occur 500 km west of Washington D.C.). Notice that the horizon range for this 150 km burst height is 1,370 km, and with the burst location shown that zaps 70 % of the electricity consumption of the United States, but if the burst height were 500 km then the horizon radius would be 2,450 km and would cover the entire United States of America. This distance is very important because the peak signal has a rise time of typically 20 ns, which implies a VHF frequency on the order of 50 MHz, which cannot extend past the horizon (although lower frequencies will obviously bounce off the ionosphere and refract and therefore extend past the horizon). However, if you simply increase the burst altitude, you would then need a megaton explosion to avoid diluting the energy, and hence the effects, over the larger area covered.
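The horizon ranges and the 50 MHz figure quoted in the caption can be checked with simple geometry. The Python sketch below assumes a spherical Earth of mean radius 6,371 km with no atmospheric refraction (both assumptions, not taken from the source), and takes the characteristic frequency as the reciprocal of the rise time, which is only an order-of-magnitude estimate. The function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius of a spherical Earth


def horizon_ground_range_km(burst_height_km, earth_radius_km=EARTH_RADIUS_KM):
    """Distance along the ground from ground zero to the line-of-sight horizon.

    The tangent ray from the burst touches the surface where the angle at
    the Earth's centre satisfies cos(theta) = R / (R + h).
    """
    theta = math.acos(earth_radius_km / (earth_radius_km + burst_height_km))
    return earth_radius_km * theta


def characteristic_frequency_hz(rise_time_s):
    """Order-of-magnitude frequency implied by a pulse rise time (1 / t)."""
    return 1.0 / rise_time_s


for height_km in (150.0, 500.0):
    print(f"{height_km:.0f} km burst: horizon at about "
          f"{horizon_ground_range_km(height_km):.0f} km")

print(f"20 ns rise time: about {characteristic_frequency_hz(20e-9) / 1e6:.0f} MHz")
```

Under these assumptions the results agree with the caption's 1,370 km and 2,450 km to within a few kilometres.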

NOBEL LAUREATE FAILED TO PREDICT THE SEVERE EMP MECHANISM

In October 1957, Nobel Laureate Dr Hans A. Bethe's report, "Electromagnetic Signal Expected from High-Altitude Test" (Los Alamos Scientific Laboratory report LA-2173, secret-restricted data), predicted incorrectly that only a weak electromagnetic pulse (EMP) would be produced by a nuclear detonation in space or at very high altitude, due to vertical oscillations resulting from the downward-travelling hemisphere of radiation. This is the 'electric dipole' EMP mechanism and is actually a trivial EMP mechanism for high altitude bursts.

Hardtack-Teak, a 3.8 Mt, 50 % fission test carried by missile to 77 km altitude directly over Johnston Island on 1 August 1958, gave rise to a powerful EMP, but close-in waveform measurements failed. This was partly due to an error in the missile, which caused it to detonate above the island instead of 30 km down range as planned (forcing half a dozen filmed observers at the entrance to the control station to duck and cover in panic; see the official on-line U.S. Department of Energy test film clip), but mainly because of Bethe's false prediction that the EMP would be vertically polarised and very weak (on the order of 1 V/m). Due to Bethe's error, the EMP measurement oscilloscopes were set to an excessive sensitivity, so the actual signal would have sent them immediately off-scale:

'The objective was to obtain and analyze the wave form of the electromagnetic (EM) pulse resulting from nuclear detonations, especially the high-altitude shots. ... Because of relocation of the shots, wave forms were not obtained for the very-high-altitude shots, Teak and Orange. During shots Yucca, Cactus, Fir, Butternut, Koa, Holly, and Nutmeg, the pulse was measured over the frequency range from 0 to 10 mega-cycles. ... Signals were picked up by short probe-type antennas, and fed via cathode followers and delay lines to high-frequency oscilloscopes. Photographs of the traces were taken at three sweep settings: 0.2, 2, and 10 micro-sec/cm.

'The shot characteristics were compared to the actual EM-pulse wave-form parameters. These comparisons showed that, for surface shots, the yield, range and presence of a second [fusion] stage can be estimated from the wave-form parameters. EM-pulse data obtained by this project is in good agreement with that obtained during Operation Redwing, Project 6.5.' - F. Lavicka and G. Lang, Operation Hardtack, Project 6.4, Wave Form of Electromagnetic Pulse from Nuclear Detonations, U.S. Army, weapon test report WT-1638, originally Secret - Restricted Data (15 September 1960).

However, the Apia Observatory at Samoa, 3,200 km from the Teak detonation, recorded the ‘sudden commencement’ of an intense magnetic disturbance – four times stronger than any recorded due to solar storms – followed by a visible aurora along the earth’s magnetic field lines (reference: A.L. Cullington, Nature, vol. 182, 1958, p. 1365). [See also: D. L. Croom, ‘VLF radiation from the high altitude nuclear explosions at Johnston Island, August 1958,’ J. Atm. Terr. Phys., vol. 27, p. 111 (1965).]

The expanding ionised (thus conductive, and hence diamagnetic) fireball excluded and thus ‘pushed out’ the Earth’s natural magnetic field as it expanded, an effect called ‘magnetohydrodynamic (MHD)-EMP’. But it was on 9 July 1962, during the American Starfish shot, a 1.4 Mt warhead missile-carried to an altitude of 400 km, that EMP damage was seen over 1,300 km to the east, and the Starfish space burst EMP waveform was measured by Richard Wakefield. Cameras were used to photograph oscilloscope screens, showing the EMP pickup in small aerials. Neither Dr Bethe’s downward current model, nor the MHD-EMP model, explained the immense peak EMP. In 1963, Dr Conrad Longmire at Los Alamos argued that, in low-density air, electrons knocked from air molecules by gamma rays travel far enough to be greatly deflected by the earth’s magnetic dipole field. Longmire's theory is therefore called the 'magnetic dipole' EMP mechanism, to distinguish it from Bethe's 'electric dipole' mechanism.

[Illustration credit: Atomic Weapons Establishment, Aldermaston, http://www.awe.co.uk/main_site/scientific_and_technical/featured_areas/dpd/computational_physics/nuclear_effects_group/electromagnetic_pulse/index.html (this page has been removed since it was accessed in 2006).]
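To get a feel for the scale of the deflection in Longmire's argument, the radius of the circle that a Compton electron tries to follow in the Earth's magnetic field can be estimated. The Python sketch below assumes a representative electron kinetic energy of 1 MeV and motion entirely perpendicular to the field; both are illustrative assumptions, not values from the source. The field strengths of 0.3 and 0.5 Gauss are the equatorial and mid-latitude values quoted earlier in this post.

```python
import math

ELECTRON_REST_ENERGY_MEV = 0.51099895  # electron rest energy, m*c^2
SPEED_OF_LIGHT_M_S = 299_792_458.0
TESLA_PER_GAUSS = 1.0e-4


def gyroradius_m(kinetic_energy_mev, field_gauss):
    """Relativistic gyroradius of an electron moving perpendicular to B.

    r = p / (e * B). Expressing the momentum as pc in electron-volts lets
    the elementary charge cancel, leaving r = pc[eV] / (c * B[T]).
    """
    pc_mev = math.sqrt(
        kinetic_energy_mev * (kinetic_energy_mev + 2.0 * ELECTRON_REST_ENERGY_MEV)
    )
    field_tesla = field_gauss * TESLA_PER_GAUSS
    return pc_mev * 1.0e6 / (SPEED_OF_LIGHT_M_S * field_tesla)


# Assumed 1 MeV Compton electron in the two field strengths quoted in the post.
for field_gauss in (0.3, 0.5):
    radius = gyroradius_m(1.0, field_gauss)
    print(f"B = {field_gauss} Gauss: gyroradius of roughly {radius:.0f} m")
```

A radius on the order of 100 m is negligible deflection in dense sea-level air, where such an electron is stopped within a few metres, but in thin air at high altitude the electron travels far enough to be turned sideways. The smaller radius in the stronger field is consistent with the stronger EMP expected at higher magnetic latitudes.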

Dr Longmire showed that the successive, sideways-deflected Compton-scattered electrons cause an electromagnetic field that adds up coherently (it travels in step with the gamma rays causing the Compton current), until ‘saturation’ is reached at ~ 60,000 V/m (when the strong field begins to attract electrons back to positive charges, preventing further increase). It is impossible to produce a 'magnetic dipole' EMP from a space burst which exceeds 65,000 V/m at the Earth's surface, even with a 10 Mt detonation at just 30 km altitude over the magnetic equator. The exact value of the saturation field depends on burst altitude. See pages 33 and 36 of Louis W. Seiler, Jr., A Calculational Model for High Altitude EMP, report AD-A009208, March 1975.

Many modern nuclear warheads with thin cases would produce weaker EMP, because of pre-ionisation of the atmosphere by x-rays released by the primary fission stage before the major gamma emission from the final fission stage of the weapon. An EMP cannot be produced efficiently in ionised (electrically conducting) air, as that literally shorts out the EMP very quickly. This means that many thermonuclear weapons with yields of around 100 kilotons would produce saturation electric fields on the ground of only 15,000-30,000 V/m if detonated in space. For more about this, see Dr Michael Bernardin's testimony to the U.S. Congress: